Landscape Metrics

This page offers an additional option for quantifying landscape configuration using R. The landscape under analysis is a flexible concept and can represent any spatial phenomenon. With R we can quantify, sample, and visualize the areal extent and spatial configuration of patches within a landscape. We will cover a few important steps for data analysis and visualization. First comes an introduction to the package: how to use it, which functions it has, and how to extract the information. Second, we will take that information and create publication-ready plots, which lets us become more familiar with R and use some of its best features. All the code is provided with a short explanation of what each line does.

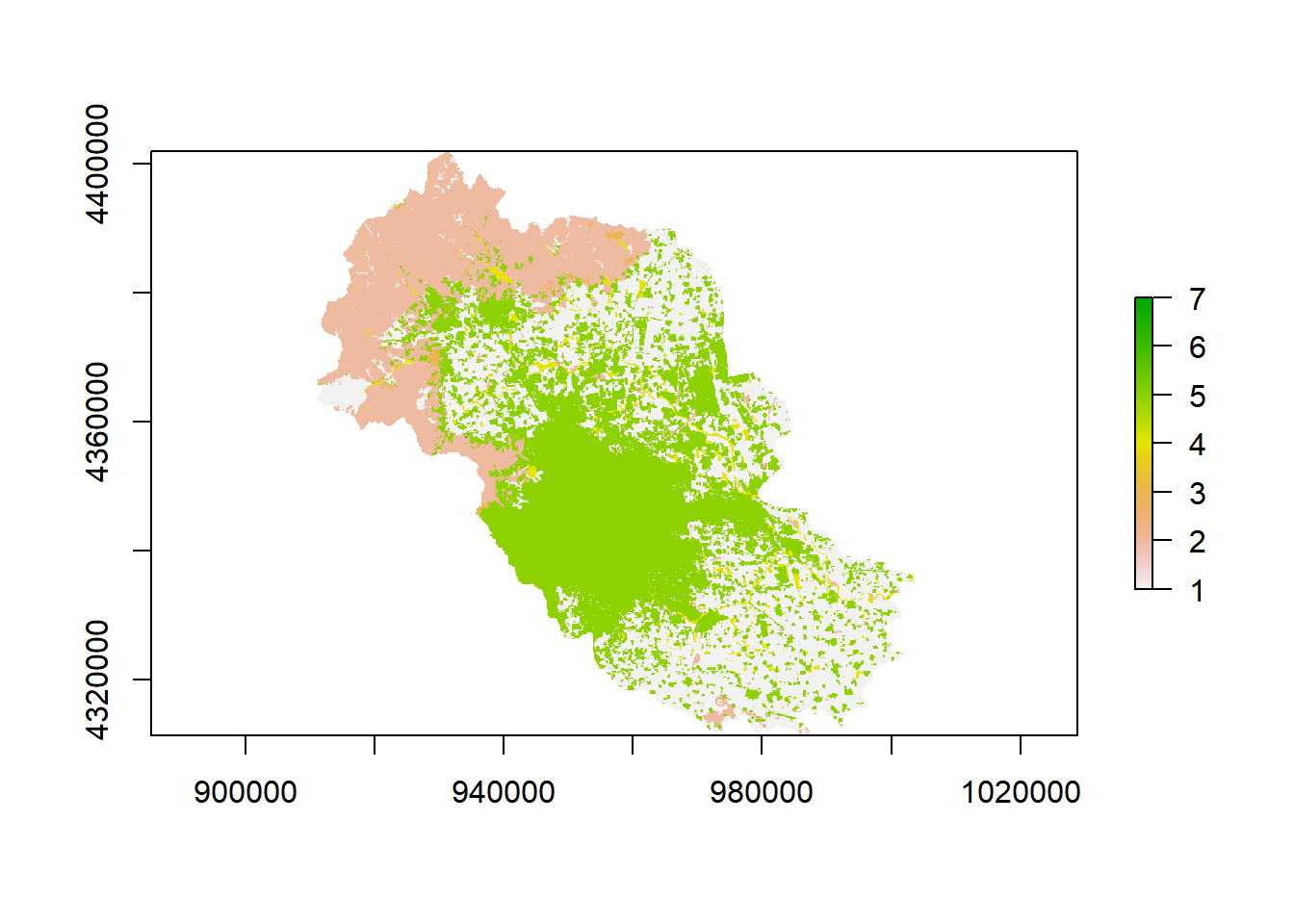

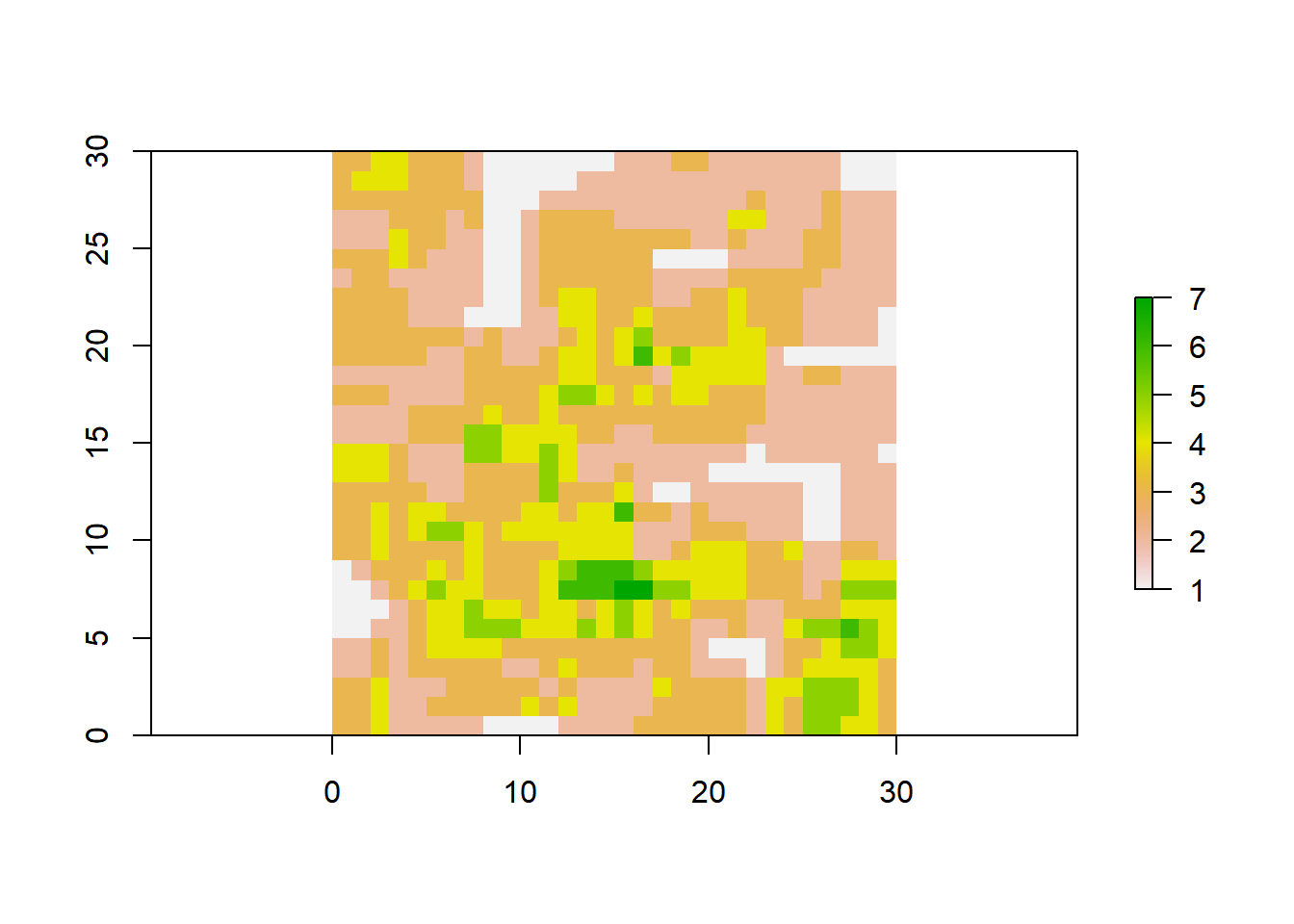

Raster files are a great source of information. In our studies, they record the land-use type in each grid cell. Think of a raster as a large area made up of many squares; each square holds a numeric code that tells the computer, and us, whether that cell is water, grassland, forest, and so on.

GIS software is among the most widely used tools for spatial analysis because it offers a convenient interface to work with. Nevertheless, it is not the only option. Over the last few years R has improved rapidly, adding functions that make analysis easier, broader, and above all reproducible. Whatever your skill level, if you have R and the required packages you can run what other people have written, then improve and modify it to suit your needs.

To do spatial analysis entirely in RStudio we will use the raster package. It has been part of the R ecosystem for a long time and is one of the most solid packages for raster data analysis. We will learn a few of its functions that we need when analyzing landscape metrics.

Let’s begin by loading our libraries. As mentioned before, you will need to do this every time you restart your project or R.
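
A minimal sketch of this step, assuming the raster and landscapemetrics packages are installed. The original map is not included on this page, so toy_map is a hypothetical stand-in: a 30 x 30 grid of 30 m cells with three random land-use classes.

```r
library(raster)
library(landscapemetrics)

# Hypothetical stand-in for the study map (not the original data):
# three random classes on a 30 x 30 grid in a metric (UTM) projection.
set.seed(1)
toy_map <- raster(matrix(sample(1:3, 900, replace = TRUE), nrow = 30),
                  xmn = 0, xmx = 900, ymn = 0, ymx = 900,
                  crs = "+proj=utm +zone=17 +datum=WGS84 +units=m")

# Printing the object shows its dimensions, resolution, extent, and CRS.
toy_map
```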


R does only what you tell it to, so every instruction needs to be explicit. We can begin by looking at our map, so let’s use the built-in plotting function, plot.


To determine the number of cells for each category we will use freq().
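
The plotting and counting steps might look like the sketch below. It assumes the raster package and uses a hypothetical random map (toy_map) in place of the original file.

```r
library(raster)

# Hypothetical stand-in map with three land-use classes.
set.seed(1)
toy_map <- raster(matrix(sample(1:3, 900, replace = TRUE), nrow = 30),
                  xmn = 0, xmx = 900, ymn = 0, ymx = 900,
                  crs = "+proj=utm +zone=17 +datum=WGS84 +units=m")

# Draw the map; each class code gets its own colour.
plot(toy_map)

# Count the cells in each class: a matrix with columns "value" and "count".
cell_counts <- freq(toy_map)
cell_counts
```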


Our raster map looks great and has the information that we want, so we can calculate the metrics for this area.

Landscape Metrics

The landscapemetrics package was introduced last year, so it is still fairly new. It calculates the same metrics as other existing software, but adds an R style that makes it better suited to large workflows, tidy data formats, small cell resolutions, and parametrization of metrics, and it works across operating systems. In my personal opinion, it is a package that will not give you headaches.
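
Before calculating anything, it can help to check that the map meets the package's expectations. The sketch below uses check_landscape() from landscapemetrics on a hypothetical stand-in map; it assumes a landscapemetrics release that accepts raster objects.

```r
library(raster)
library(landscapemetrics)

# Hypothetical stand-in map with three land-use classes.
set.seed(1)
toy_map <- raster(matrix(sample(1:3, 900, replace = TRUE), nrow = 30),
                  xmn = 0, xmx = 900, ymn = 0, ymx = 900,
                  crs = "+proj=utm +zone=17 +datum=WGS84 +units=m")

# Reports the CRS type, map units, value class, and number of classes,
# one row per layer.
map_check <- check_landscape(toy_map)
map_check
```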


The function to get the metrics is calculate_lsm() and there are a few options for us to play with.
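
A minimal sketch of a calculate_lsm() call, again on a hypothetical stand-in map. The two metrics chosen here (percentage of landscape per class and Shannon's diversity index) are only examples, not necessarily the ones used originally.

```r
library(raster)
library(landscapemetrics)

# Hypothetical stand-in map with three land-use classes.
set.seed(1)
toy_map <- raster(matrix(sample(1:3, 900, replace = TRUE), nrow = 30),
                  xmn = 0, xmx = 900, ymn = 0, ymx = 900,
                  crs = "+proj=utm +zone=17 +datum=WGS84 +units=m")

# "what" takes the full function names of the metrics to calculate.
metrics <- calculate_lsm(toy_map, what = c("lsm_c_pland", "lsm_l_shdi"))

# The result is a tidy table: layer, level, class, id, metric, value.
metrics
```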


That is how it works. We got some metrics, but what do they mean? The full landscapemetrics documentation describes every metric and everything else the package can do.

Remembering the metric names can be tedious and complicated, so we can first take a look at the full list of metrics available in the package.
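
The list can be retrieved with list_lsm(), which needs no map at all. A short sketch:

```r
library(landscapemetrics)

# One row per metric: abbreviation, full name, type, level, and the
# name of the function that calculates it.
all_metrics <- list_lsm()
head(all_metrics)

# The same function can filter, for example to landscape-level metrics only.
landscape_level <- list_lsm(level = "landscape")
nrow(landscape_level)
```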


We can get all the metrics for the patch, class, or landscape level if we set a level. We get the metrics across all levels if we set only a type: diversity metric, aggregation metric, shape metric, core area metric, area and edge metric, or complexity metric. If you leave both empty, you will get every metric for each map. That last option may take a while, depending on the capacity of your computer and how many maps you want to analyze.
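
The level and type arguments described above can be sketched as follows, using a hypothetical stand-in map. Combining level and type, as in the first call, is just one way to keep the example quick.

```r
library(raster)
library(landscapemetrics)

# Hypothetical stand-in map with three land-use classes.
set.seed(1)
toy_map <- raster(matrix(sample(1:3, 900, replace = TRUE), nrow = 30),
                  xmn = 0, xmx = 900, ymn = 0, ymx = 900,
                  crs = "+proj=utm +zone=17 +datum=WGS84 +units=m")

# Class-level metrics of a single type.
class_area_edge <- calculate_lsm(toy_map, level = "class",
                                 type = "area and edge metric")

# Every metric of one type, whatever level it belongs to.
diversity <- calculate_lsm(toy_map, type = "diversity metric")

unique(class_area_edge$metric)
unique(diversity$metric)
```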


The workflow for this package is straightforward, and it gives us a lot of information for analysis and visualization.

Utility functions

As mentioned by the authors, the utility functions are one of the biggest differences from other software. We can visualize, extract, and sample different areas for further analysis.

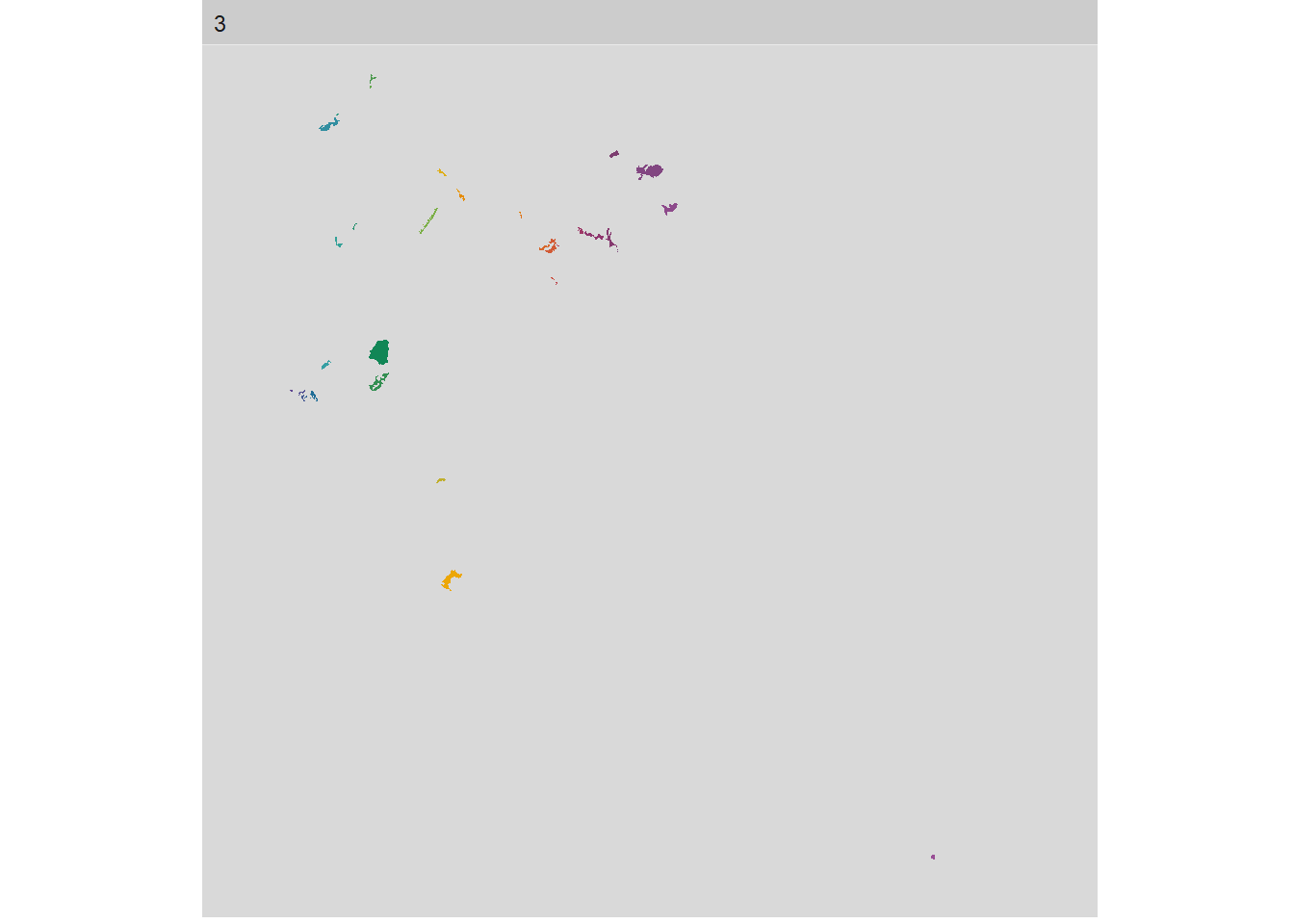

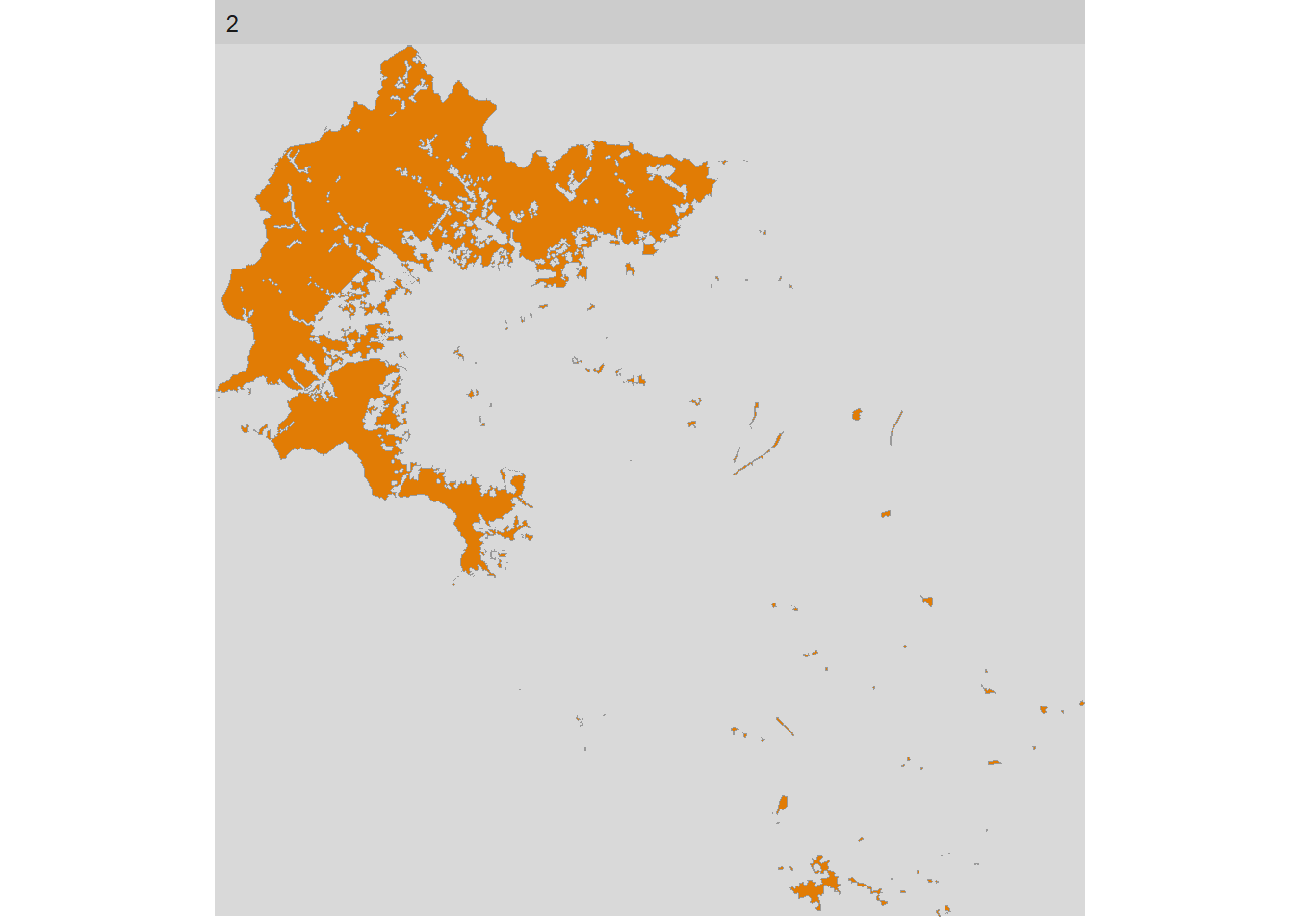

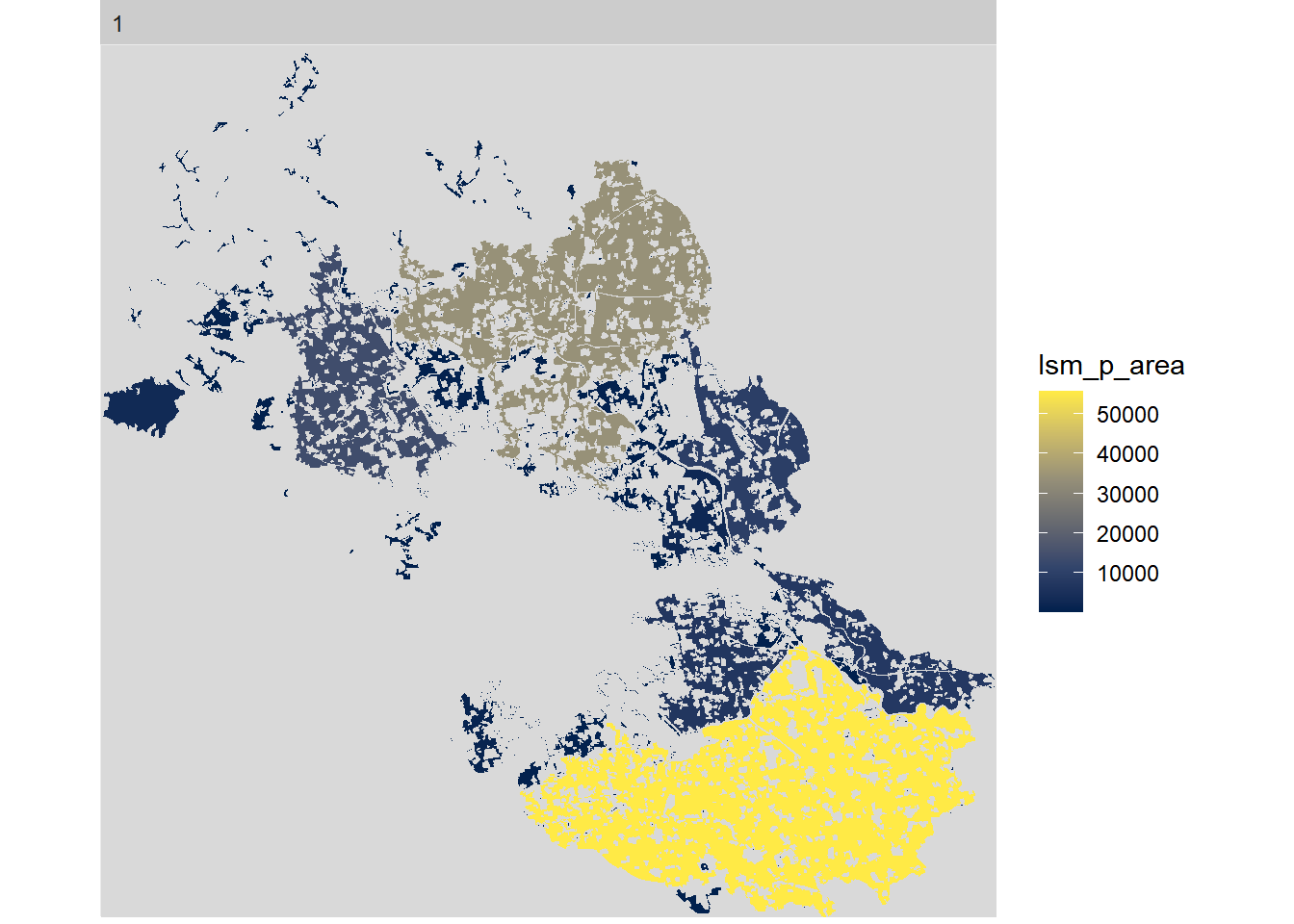

Visualization: Levels and Metrics

If we put all the information in one plot, it is hard to follow the changes in each class, and smaller changes are not obvious to readers. The package includes functions that let you select a particular level, class, or even metric. This is a great way to give graphic meaning to our calculations. Their names all start with show_.
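
Three of the show_ functions are sketched below. The striped map is a hypothetical stand-in chosen so that every class has interior (core) cells; the arguments shown are a small selection, and the return type (a single plot or a list of plots) may differ between package versions.

```r
library(raster)
library(landscapemetrics)

# Hypothetical stand-in map: three vertical bands, one per class.
striped_map <- raster(matrix(rep(1:3, each = 300), nrow = 30),
                      xmn = 0, xmx = 900, ymn = 0, ymx = 900,
                      crs = "+proj=utm +zone=17 +datum=WGS84 +units=m")

# Patches of every class, one facet per class.
p_patches <- show_patches(striped_map, class = "all", labels = FALSE)

# Core areas for two selected classes.
p_cores <- show_cores(striped_map, class = c(1, 2), labels = FALSE)

# Patches filled by the value of a patch-level metric (here patch area).
p_area <- show_lsm(striped_map, what = "lsm_p_area", class = "global",
                   label_lsm = FALSE)

p_patches
p_cores
p_area
```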


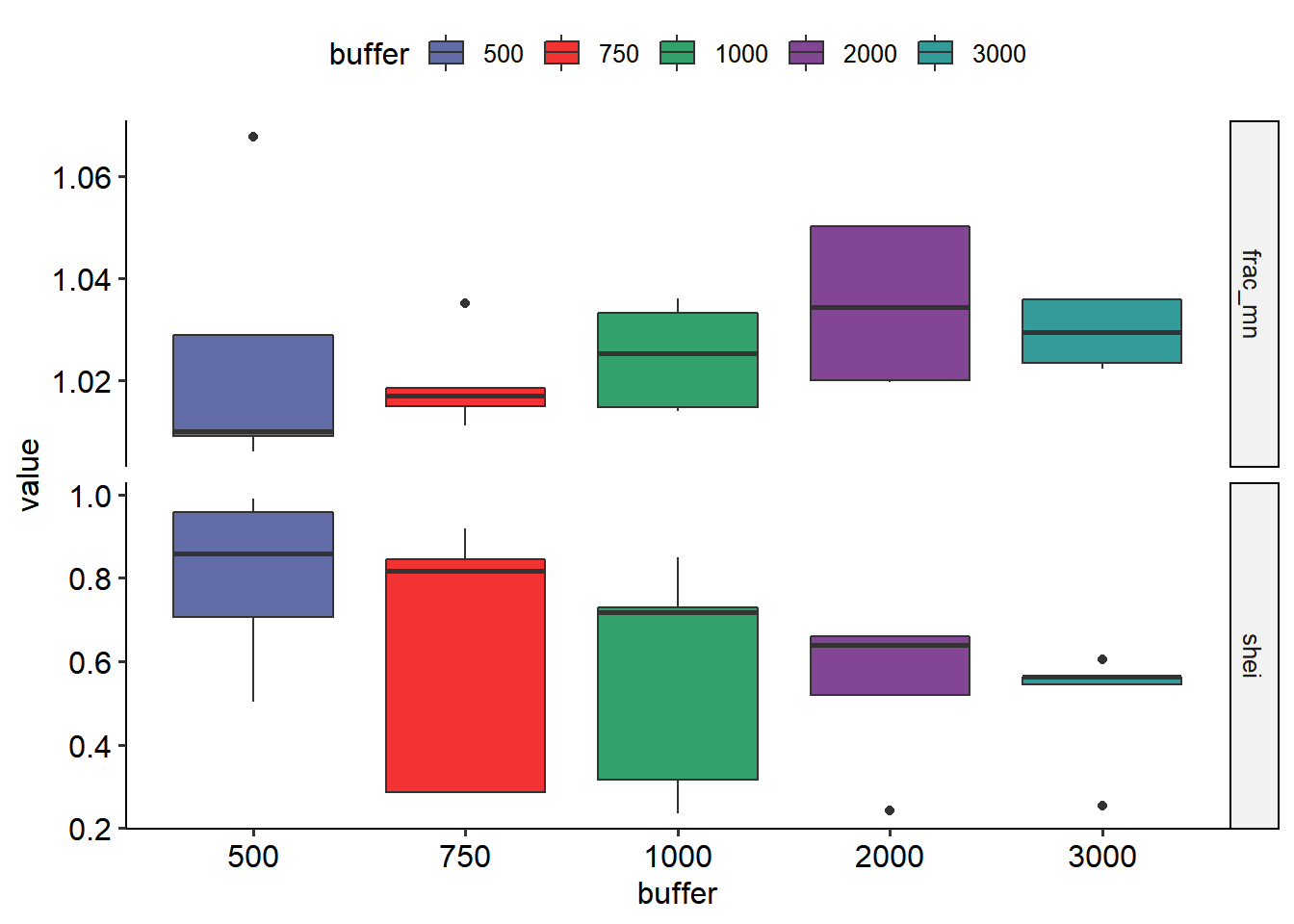

Buffer analysis

The first map was already coded the right way for us. Sometimes that is not the case, and the results will be wrong. Let’s take a map whose CRS is in latitude and longitude coordinates and get it ready for buffer analysis.
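
Once the map is in a metric projection, a buffer analysis can be sketched with sample_lsm(). The map, the two sample locations, and the 150 m radius below are all hypothetical.

```r
library(raster)
library(landscapemetrics)

# Hypothetical stand-in map, already in a metric (UTM) projection.
set.seed(1)
toy_map <- raster(matrix(sample(1:3, 900, replace = TRUE), nrow = 30),
                  xmn = 0, xmx = 900, ymn = 0, ymx = 900,
                  crs = "+proj=utm +zone=17 +datum=WGS84 +units=m")

# Hypothetical sample locations as a two-column matrix (x, y) in map units.
sample_points <- matrix(c(300, 300,
                          600, 600), ncol = 2, byrow = TRUE)

# Circular buffers of 150 m radius; one landscape-level metric per buffer.
buffer_metrics <- sample_lsm(toy_map, y = sample_points,
                             shape = "circle", size = 150,
                             what = "lsm_l_shdi")
buffer_metrics
```

The plot_id column identifies each buffer, and percentage_inside reports how much of the buffer actually fell inside the map.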


Moving window analysis

This will show a gradient of variability in the landscape. The analysis moves cell by cell, like a scanner, to determine how the center (focal) cell differs from its neighboring cells. The more the surroundings differ, the higher the value assigned to that cell and region.

Large maps (many cells) take a while to process with this function, so we will use just a small sample so you can get the results in a few seconds.
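
A minimal sketch of a moving window with window_lsm(), on a small hypothetical map. The 3 x 3 window and the patch richness metric are illustrative choices; window_lsm() works with landscape-level metrics.

```r
library(raster)
library(landscapemetrics)

# Small hypothetical map so the moving window finishes quickly.
set.seed(1)
small_map <- raster(matrix(sample(1:3, 400, replace = TRUE), nrow = 20),
                    xmn = 0, xmx = 600, ymn = 0, ymx = 600,
                    crs = "+proj=utm +zone=17 +datum=WGS84 +units=m")

# The window is a matrix of 1s: here each focal cell plus its 8 neighbours.
window <- matrix(1, nrow = 3, ncol = 3)

# Patch richness (number of classes) inside the window around every cell.
moving <- window_lsm(small_map, window = window, what = "lsm_l_pr")

# The result is a nested list: one element per layer, then one per metric.
str(moving, max.level = 2)
```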


Now let’s plot the result and export the values if needed.


You can export the results after making a matrix matching the size of the raster file.
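
Plotting and exporting the moving window result might look like the sketch below. The map is hypothetical, the output goes to a temporary folder, and the class check is there because the returned raster type depends on the landscapemetrics version (an assumption worth verifying on your installation).

```r
library(raster)
library(landscapemetrics)

# Small hypothetical map and a 3 x 3 moving window.
set.seed(1)
small_map <- raster(matrix(sample(1:3, 400, replace = TRUE), nrow = 20),
                    xmn = 0, xmx = 600, ymn = 0, ymx = 600,
                    crs = "+proj=utm +zone=17 +datum=WGS84 +units=m")
moving <- window_lsm(small_map, window = matrix(1, nrow = 3, ncol = 3),
                     what = "lsm_l_pr")

# First layer, first metric.
result_layer <- moving[[1]][[1]]

# Plot, then convert to a matrix with the same rows and columns as the raster.
if (inherits(result_layer, "SpatRaster")) {
  terra::plot(result_layer)
  values_mat <- terra::as.matrix(result_layer, wide = TRUE)
} else {
  raster::plot(result_layer)
  values_mat <- raster::as.matrix(result_layer)
}

# Write the matrix to a CSV file (temporary folder used for illustration).
out_file <- file.path(tempdir(), "moving_window_pr.csv")
write.csv(values_mat, out_file, row.names = FALSE)
```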


These are the main functions of the landscapemetrics package; there are many more that you can explore in your own time. The results are clear and the structure is well suited to graphs, yet we can make a few changes so they are more informative to everyone and produce better communication documents. Next, we will cover some other R functions, data changes, and graphs that we can produce with these results.

Beyond the basics

Revalue and export

Let’s give the right names to our layers and classes.


data_frame$variable means: within data_frame, work on that variable. Remember that we use <- to store information, so if we use the same name it will replace the old values. If we give a new name, we will have a new variable in that data set. Revalue your observations with the structure c("old name" = "new name") for as many names as you want to change.
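
The idea can be sketched in base R with a named lookup vector that has the same c("old name" = "new name") shape. This is a swapped-in illustration rather than the original code; the table and the class names are made up.

```r
# Made-up rows shaped like calculate_lsm() output (illustrative values only).
metrics <- data.frame(layer  = 1,
                      level  = "class",
                      class  = c(1, 2, 3),
                      metric = "pland",
                      value  = c(40, 35, 25))

# Old value = new value; the class names are hypothetical.
class_names <- c("1" = "Water", "2" = "Grassland", "3" = "Forest")

# A new column name keeps the old codes and adds the labels next to them.
metrics$class_name <- unname(class_names[as.character(metrics$class)])

# Reusing an existing name replaces the old values.
metrics$layer <- "Study area"

metrics
```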

We can reshape our data frame by metric if we want to write tables with the metrics as columns and export that information.


Export the data as a CSV file
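
The reshape and export steps can be sketched as below, assuming the tidyr package for the reshape. The values are made up, and the file is written to a temporary folder for illustration.

```r
library(tidyr)

# Made-up long-format metrics (illustrative values only).
metrics <- data.frame(
  class  = rep(c("Water", "Grassland", "Forest"), times = 2),
  metric = rep(c("pland", "np"), each = 3),
  value  = c(40, 35, 25, 4, 7, 5)
)

# One row per class, one column per metric.
metrics_wide <- pivot_wider(metrics, names_from = metric, values_from = value)
metrics_wide

# Export as CSV without the row numbers.
out_file <- file.path(tempdir(), "landscape_metrics.csv")
write.csv(metrics_wide, out_file, row.names = FALSE)
```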


Latitude and longitude maps

As mentioned earlier, if our data is in degrees then we must reproject the raster so that its cells are measured in meters. We covered buffer analysis; now let’s deal with a more complex example that uses multiple buffer sizes with a map and locations given to you in degrees.

Both the points and the map need to be in the same coordinate system, otherwise your sampling areas will not be accurate. For the sampling locations, we will cover two options. First, change the coordinates of the matrix and then proceed to the buffer analysis. Second, use the write function to create a gpkg file containing our points.


The current map CRS


Get a CRS in meters for your map, then use the projectRaster function to project and save the map.
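
A sketch of checking the CRS and projecting the map, using a hypothetical longitude/latitude raster. The target CRS (UTM zone 17N) and the file name are assumptions; pick the metric CRS that fits your study area.

```r
library(raster)

# Hypothetical map in degrees (longitude/latitude, WGS84).
set.seed(1)
lonlat_map <- raster(matrix(sample(1:3, 400, replace = TRUE), nrow = 20),
                     xmn = -83.50, xmx = -83.48, ymn = 33.00, ymx = 33.02,
                     crs = "+proj=longlat +datum=WGS84")

# Inspect the current CRS.
crs(lonlat_map)

# Project to a CRS in meters. Nearest neighbour ("ngb") keeps the class
# codes intact instead of averaging them.
utm_map <- projectRaster(lonlat_map,
                         crs = "+proj=utm +zone=17 +datum=WGS84 +units=m",
                         method = "ngb")

# Save the projected map (temporary folder used for illustration).
out_file <- file.path(tempdir(), "map_utm.tif")
writeRaster(utm_map, out_file, overwrite = TRUE)
```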


Sampling points are more likely to be in degrees, so we need to declare the CRS that was used to record those points and then transform them to the map CRS.


A great option is to write a gpkg file for all your sample points.
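
Transforming the points and writing them to a gpkg file could be sketched with the sf package, which is a swapped-in choice here rather than a confirmed part of the original workflow. The site names and coordinates are made up, and EPSG:32617 (UTM zone 17N) is assumed to be the map CRS.

```r
library(sf)

# Made-up sampling sites recorded in degrees.
sites <- data.frame(site = c("A", "B"),
                    lon  = c(-83.495, -83.485),
                    lat  = c(33.005, 33.015))

# Declare the CRS the points were recorded in (WGS84, EPSG:4326).
points_lonlat <- st_as_sf(sites, coords = c("lon", "lat"), crs = 4326)

# Transform to the map CRS (assumed here: UTM zone 17N, EPSG:32617).
points_utm <- st_transform(points_lonlat, crs = 32617)

# Write all sample points to a GeoPackage (temporary folder for illustration).
out_file <- file.path(tempdir(), "sample_points.gpkg")
st_write(points_utm, out_file, delete_dsn = TRUE, quiet = TRUE)

# Coordinates in meters, as a two-column matrix.
st_coordinates(points_utm)
```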


Great, both the map and the data are in the same units and projection so we can do the analysis for multiple buffer sizes.
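
One way to run several buffer sizes is to loop over them and stack the results. The map, the points, and the three radii below are hypothetical.

```r
library(raster)
library(landscapemetrics)

# Hypothetical stand-in map in a metric projection.
set.seed(1)
toy_map <- raster(matrix(sample(1:3, 900, replace = TRUE), nrow = 30),
                  xmn = 0, xmx = 900, ymn = 0, ymx = 900,
                  crs = "+proj=utm +zone=17 +datum=WGS84 +units=m")

# Hypothetical sample locations (x, y) in the same CRS as the map.
sample_points <- matrix(c(300, 300,
                          600, 600), ncol = 2, byrow = TRUE)

# Buffer radii in meters.
buffer_sizes <- c(100, 200, 300)

# Run sample_lsm() once per radius and record the radius in a new column.
results <- lapply(buffer_sizes, function(radius) {
  out <- sample_lsm(toy_map, y = sample_points, shape = "circle",
                    size = radius, what = "lsm_l_shdi")
  out$buffer_m <- radius
  out
})

# Stack the per-radius tables into one.
multi_buffer <- do.call(rbind, results)
multi_buffer
```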


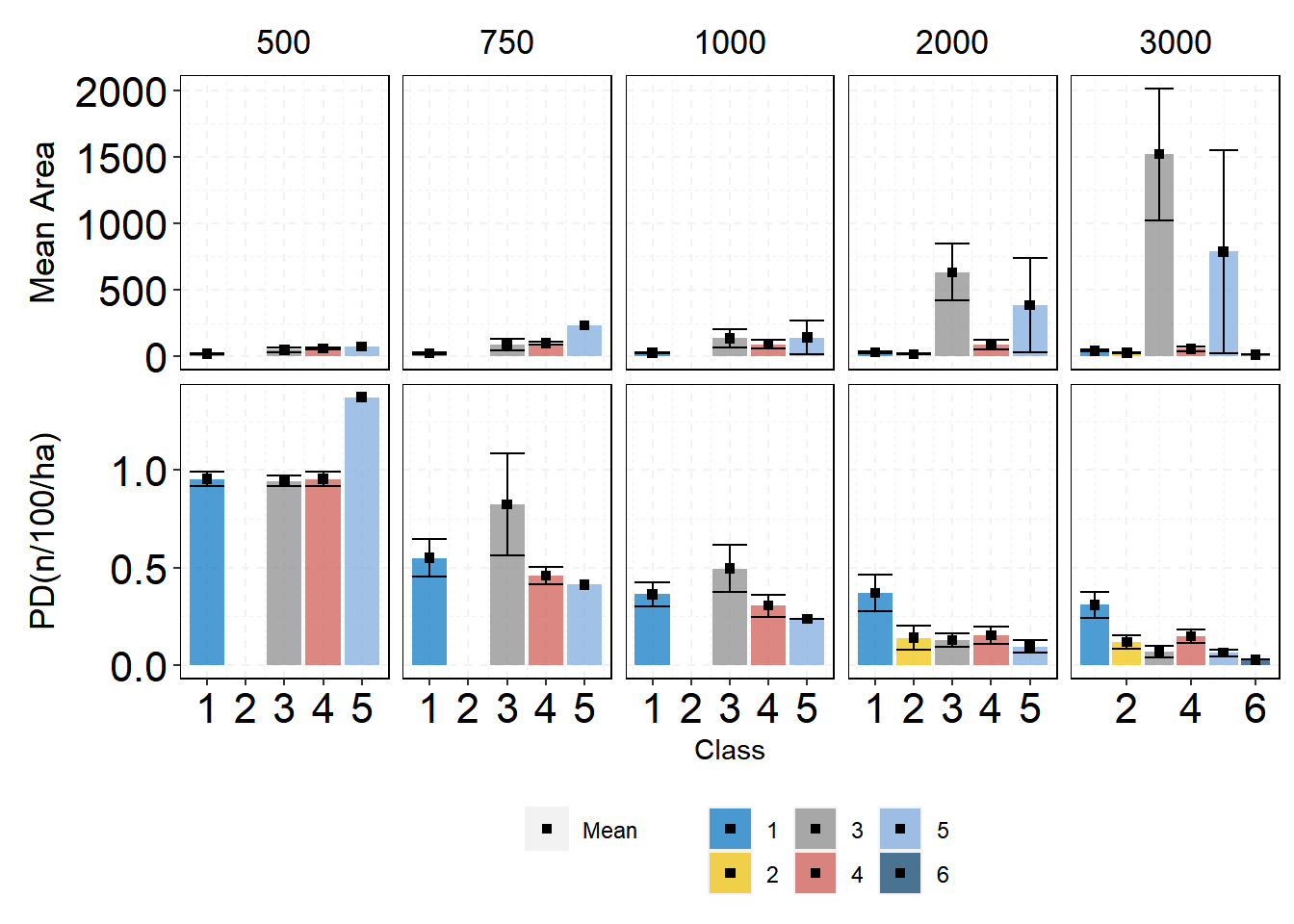

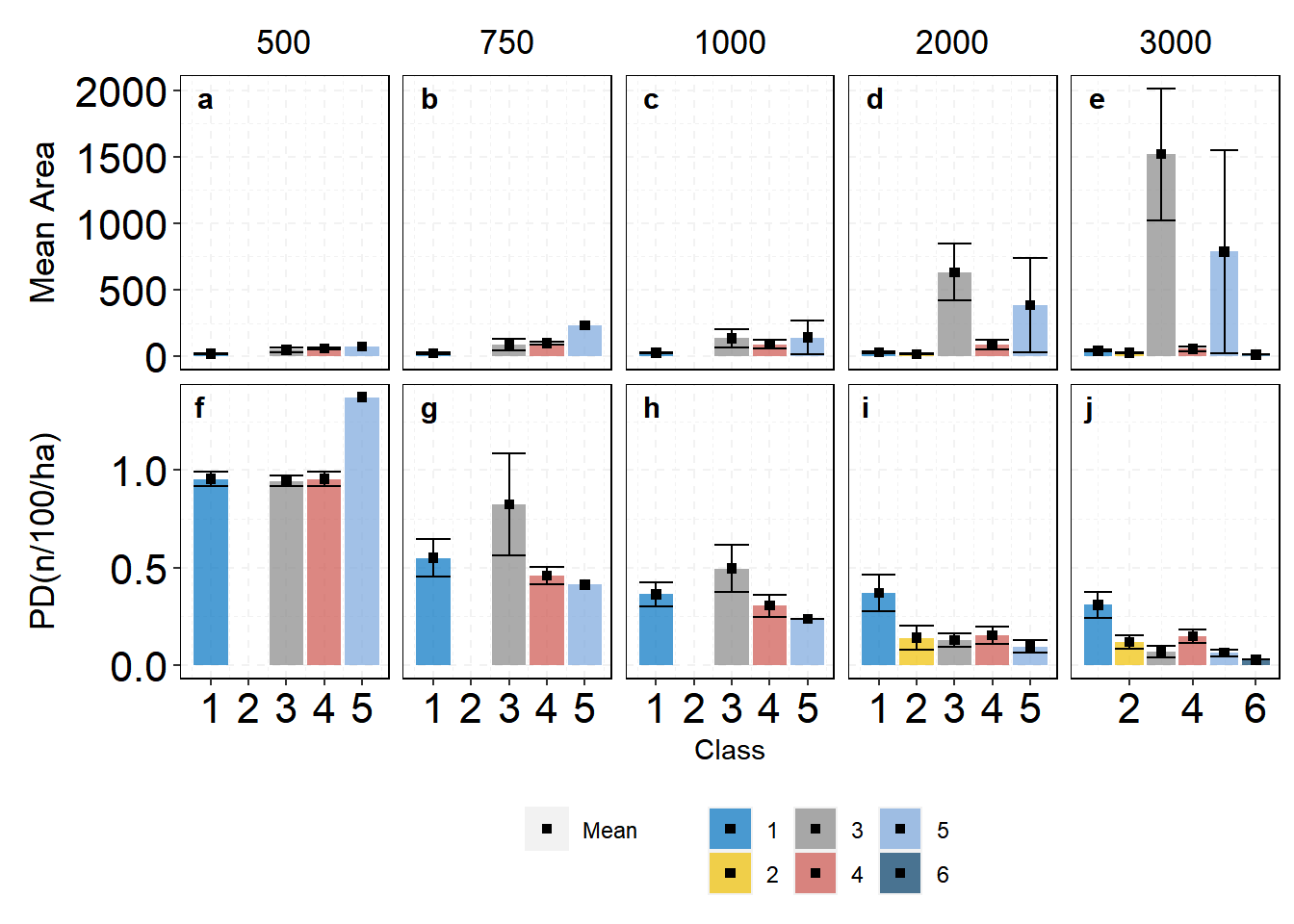

Use R graphics

R and the ggplot2 package make one of the best teams when we want to create great graphs. There are many reasons to use this package, so this part shows how to set up the data and create plots without relying on other programs that may be simple but are not reproducible.


Change the classes and map.


dplyr, like ggplot2, is part of the tidyverse collection of packages. It provides many functions for restructuring, modifying, and arranging your datasets.

The functions are verbs that are easy to remember, such as filter, summarise, group_by, and select, so we can often guess what they do without reading the documentation. Let’s use those functions to build our plots. You can also pipe the data straight into ggplot2 with %>%, so you do not need to store the data set in an intermediate variable.
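
A sketch of these verbs and of piping straight into ggplot2. The table holds made-up values shaped like the multi-buffer results, and the choice of plot (lines per class across buffer sizes) is only an example.

```r
library(dplyr)
library(ggplot2)

# Made-up metrics for three classes at three buffer sizes (illustrative only).
metrics <- data.frame(
  class    = rep(rep(c("Water", "Grassland", "Forest"), each = 3), times = 2),
  buffer_m = rep(c(100, 200, 300), times = 6),
  metric   = rep(c("pland", "np"), each = 9),
  value    = c(12, 15, 18, 50, 46, 43, 38, 39, 39,  # pland (%)
               1, 2, 2, 3, 4, 6, 2, 3, 5)           # np (patch counts)
)

# group_by + summarise: mean percentage of landscape per class.
class_means <- metrics %>%
  filter(metric == "pland") %>%
  group_by(class) %>%
  summarise(mean_pland = mean(value))
class_means

# filter + select piped directly into ggplot2, with no intermediate variable.
p <- metrics %>%
  filter(metric == "pland") %>%
  select(class, buffer_m, value) %>%
  ggplot(aes(x = buffer_m, y = value)) +
  geom_line() +
  geom_point() +
  facet_wrap(~ class) +
  labs(x = "Buffer radius (m)", y = "Percentage of landscape")
p
```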


Now we will use more functions of dplyr for this plot.


The plot is ready; we now need to add some tags inside each facet and export it.
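
One way to place a tag inside each facet is a small data frame of labels drawn with geom_text(). The data, tag text, and offsets below are made up for illustration.

```r
library(ggplot2)

# Made-up percentage-of-landscape values (illustrative only).
metrics <- data.frame(
  class    = rep(c("Water", "Grassland", "Forest"), each = 3),
  buffer_m = rep(c(100, 200, 300), times = 3),
  value    = c(12, 15, 18, 50, 46, 43, 38, 39, 39)
)

# One tag per facet; the "class" column must match the facet variable.
tags <- data.frame(class = c("Forest", "Grassland", "Water"),
                   label = c("(a)", "(b)", "(c)"))

p_tagged <- ggplot(metrics, aes(x = buffer_m, y = value)) +
  geom_line() +
  geom_point() +
  facet_wrap(~ class) +
  # -Inf / Inf pin the tag to the top-left corner of every panel;
  # hjust and vjust nudge it inside the panel border.
  geom_text(data = tags, aes(x = -Inf, y = Inf, label = label),
            hjust = -0.3, vjust = 1.5, inherit.aes = FALSE)
p_tagged
```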


To export plots at high resolution you will need the following lines of code.
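
A sketch of a high-resolution export with ggsave() from ggplot2. The plot uses made-up values, and the size, 300 dpi resolution, and file name are assumptions to adjust to your journal's requirements.

```r
library(ggplot2)

# Made-up values for a simple plot (illustrative only).
metrics <- data.frame(
  class    = rep(c("Water", "Grassland", "Forest"), each = 3),
  buffer_m = rep(c(100, 200, 300), times = 3),
  value    = c(12, 15, 18, 50, 46, 43, 38, 39, 39)
)

p <- ggplot(metrics, aes(x = buffer_m, y = value)) +
  geom_line() +
  geom_point() +
  facet_wrap(~ class)

# Width and height are in inches; dpi sets the resolution of the PNG.
out_file <- file.path(tempdir(), "landscape_plot.png")
ggsave(filename = out_file, plot = p,
       width = 8, height = 5, units = "in", dpi = 300)
```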
